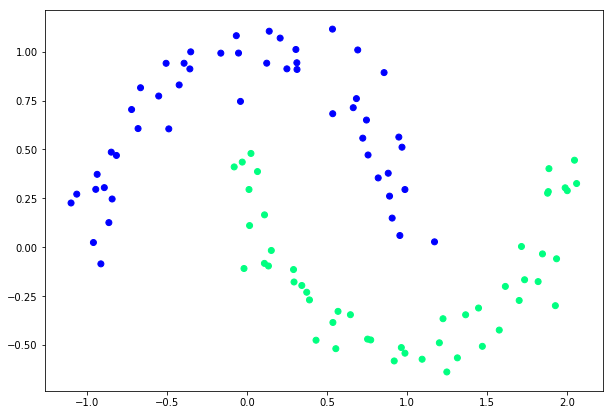

class: title-slide .row[ .col-7[ .title[ # Neural Networks using Make moons Dataset ] .subtitle[ ] .author[ ### Laxmikant Soni <br> [Web-Site](https://laxmikants.github.io) <br> [<i class="fab fa-github"></i>](https://github.com/laxmiaknts) [<i class="fab fa-twitter"></i>](https://twitter.com/laxmikantsoni09) ] .affiliation[ ] ] .col-5[ .logo[ <!-- --> ] ] ] --- class: inverse, center, middle # Make moons Dataset --- class: body # Make Moons Dataset <hr> -- .pull-top[ The make_moons dataset is a swirl pattern, or two moons. It is a set of points in 2D making two interleaving half circles. <!-- --> ] <hr> --- class: body # Importing libraries <hr> -- .pull-left[ ```python from sklearn import datasets import numpy as np import matplotlib.pyplot as plt from sklearn.model_selection import train_test_split ``` ] -- .pull-right[ - numpy is used for scientific computing with Python. It is one of the fundamental packages you will use. - matplotlib .This library is used in Python for plotting graphs. ] <hr> --- class: body # Initializing the dataset <hr> -- .pull-left[ ```python np.random.seed(0) feature_set_x, labels_y = datasets.make_moons(100, noise=0.10) X_train, X_test, y_train, y_test = train_test_split(feature_set_x, labels_y, test_size=0.33, random_state=42) ``` ] -- .pull-right[  ] <hr> In the script above we import the datasets class from the sklearn library. To create non-linear dataset of 100 data-points, we use the make_moons method and pass it 100 as the first parameter. The method returns a dataset, which when plotted contains two interleaving half circles, as shown in the figure below.You can clearly see that this data cannot be separated by a single straight line, hence the perceptron cannot be used to correctly classify this data. --- class: body # Build the model <hr> -- .pull-left[ ```python from keras.models import Sequential from keras.layers import Dense, Activation model = Sequential() model.add(Dense(50, input_dim=2, activation='relu')) model.add(Dense(1, activation='sigmoid')) model.compile(loss='binary_crossentropy', optimizer='adam', metrics= ['accuracy']) print(model.summary()) ``` ] -- .pull-right[ Model: "sequential_3" Layer (type) Output Shape Param # dense_5 (Dense) (None, 50) 150 dense_6 (Dense) (None, 1) 51 Total params: 201 Trainable params: 201 Non-trainable params: 0 -- ] <hr> The model summary gives the number of paramters input to the model. --- class: body # Train the model <hr> -- .pull-left[ ```python results = model.fit(X_train, y_train , nb_epoch=100) score = model.evaluate(X_test, y_test, verbose=0) print('Test score:', score[0]) print('Test accuracy:', score[1]) ``` ] -- .pull-right[ <font size="5"> - Test score: 0.2696294298768043 - Test accuracy: 0.8799999952316284 </font> ] <hr> The lower the loss, the better a model (unless the model has over-fitted to the training data). The loss is calculated on training and validation and its interperation is how well the model is doing for these two sets. Unlike accuracy, loss is not a percentage. It is a summation of the errors made for each example in training or validation sets. The accuracy of a model is usually determined after the model parameters are learned and fixed and no learning is taking place. Then the test samples are fed to the model and the number of mistakes (zero-one loss) the model makes are recorded, after comparison to the true targets. Then the percentage of misclassification is calculated. For example, if the number of test samples is 1000 and model classifies 875 of those correctly, then the model's accuracy is 87.5%. --- class: body # Make prediction <hr> -- .pull-left[ ```python prediction_values = model.predict_classes(X_test) ``` ] -- .pull-right[ <font size="5"> To predict different classes use model.predict_classes function. </font> -- ] <hr> --- class: inverse, center, middle # Thanks