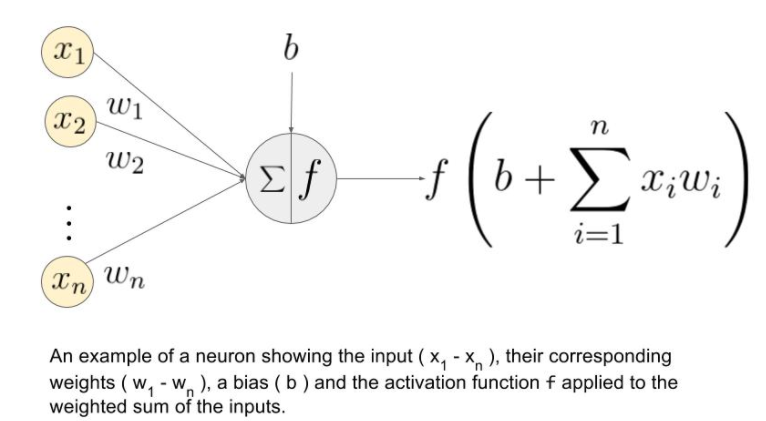

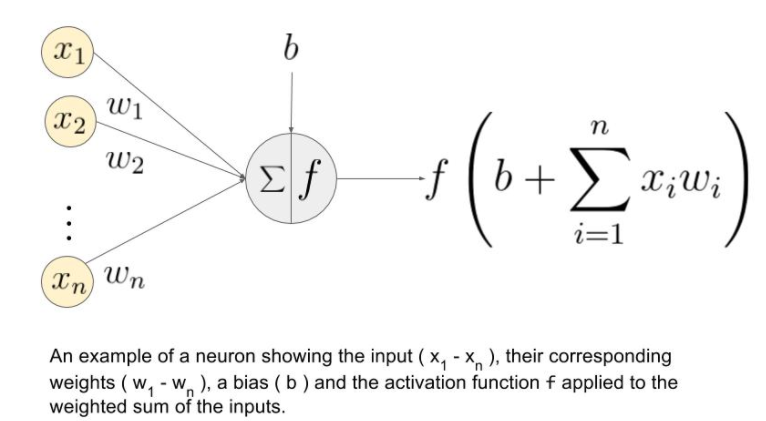

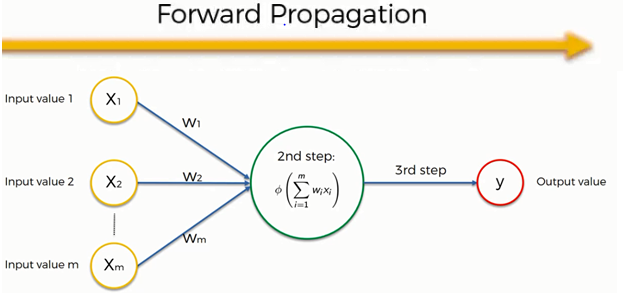

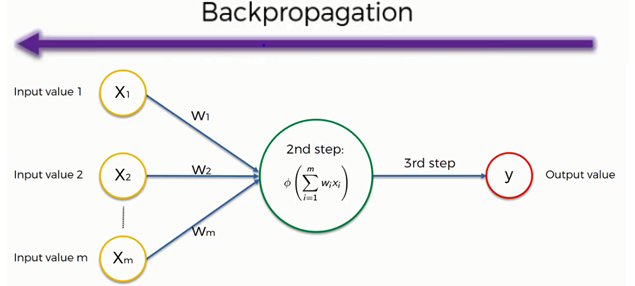

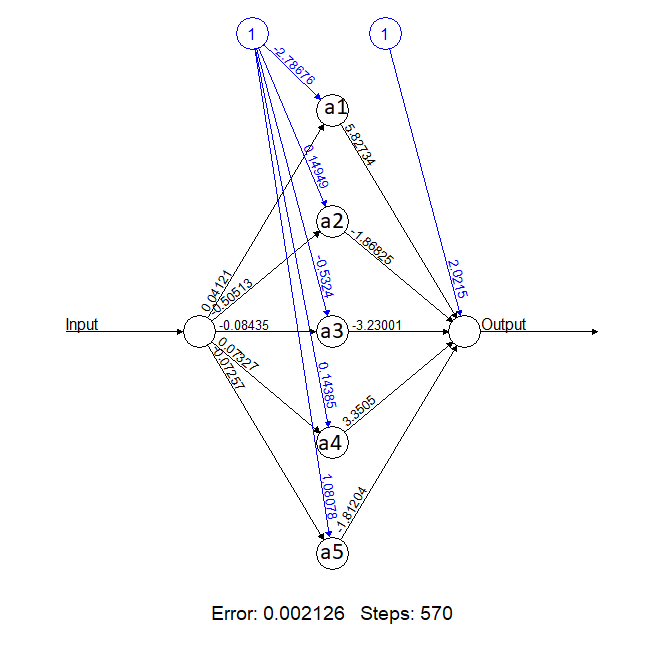

class: title-slide .row[ .col-7[ .title[ # Neural Networks ] .subtitle[ ] .author[ ### Laxmikant Soni <br> [Web-Site](https://laxmikants.github.io) <br> [<i class="fab fa-github"></i>](https://github.com/laxmiaknts) [<i class="fab fa-twitter"></i>](https://twitter.com/laxmikantsoni09) ] .affiliation[ ] ] .col-5[ .logo[ <!-- --> ] ] ] --- class: inverse, center, middle # Understanding Neural Networks --- class: body # Neural Networks Intuition <hr> -- .pull-top[ <font size="6"> * Developer looks for bugs in the code. * Ran many test cases by changing the inputs and look for the output. * then change in output provides hint on where to look for the bug which module to check, which lines to read. * Once we find it we make the changes and the exercise continues until we have the right code/application. </font> ] <hr> > Artificial Neural Networks works in the similar way, in which error is minimized by changing input weights and biases --- class: body # Perceptron and Neuron <hr> -- .pull-top[ <font size="6"> * A perceptron is a unit that takes multiple inputs and produces an output. It performs some transformation to inputs. * A neuron performs non-linear transformation to the inputs * Input and outputs can be combined in various ways. * By directly combining the input and computing the output based on a threshold value * By adding weights to the inputs * By adding additional bias along with the weights to the inputs </font> ] <hr> --- class: body # Weighted Summation <hr> -- .pull-left[ <!-- --> ] -- .pull-right[ <font size="6"> * w1 ,w2 , w3 are the weights of the connections * x1 , x2 , x3 are the input values * A neuron unit computes the summation of the weights multiplied by input values and adds a bias </font> -- ] <hr> --- class: body # Activation Function <hr> -- .pull-left[ <!-- --> ] -- .pull-right[ <font size="6"> * Activation Function takes the sum of weighted input (w1*x1 + w2*x2 + w3*x3 + 1*b) as an argument and returns the output of the neuron. * the activation function is mostly used to make a non-linear transformation that allows us to fit nonlinear hypotheses or to estimate the complex functions * Sigmoid, Tanh, ReLu </font> -- ] <hr> --- class: body # Forward Propagation <hr> -- .pull-left[ <!-- --> ] -- .pull-right[ <font size="5"> * When information is propagated from input layer to hidden layers, where after summarizing the input variables we apply an activation function and which finally result into output cost function is known as Forward Propagation. * Once we get the final output and we compute the cost function which results into error or difference between actual and predicted values. </font> -- ] <hr> --- class: body # Backward Propagation and Epoch <hr> -- .pull-left[ <!-- --> ] -- .pull-right[ <font size="5"> * The process, where error are propagated back to input layer through hidden layer to adjust the weights is called Back Propagation. * Back-propagation (BP) algorithms work by determining the loss (or error) at the output and then propagating it back into the network. The weights are updated to minimize the error. * This one round of forwarding and backpropagation iteration is known as one training iteration aka Epoch. </font> -- ] <hr> --- class: body # Full Batch Gradient Descent vs Stochastic Gradient Descent <hr> -- .pull-top[ <font size="5"> * Both variants of Gradient Descent perform the same work of updating the weights of the MLP by using the same updating algorithm but the difference lies in the number of training samples used to update the weights and biases. * Full Batch Gradient Descent Algorithm uses all the training data points to update each of the weights * Stochastic Gradient uses 1 or more(sample) but never the entire training data to update the weights once. * Full Batch: You use 10 data points (entire training data) and calculate the change in w1 (Δw1) and change in w2(Δw2) and update w1 and w2. * SGD: You use 1st data point and calculate the change in w1 (Δw1) and change in w2(Δw2) and update w1 and w2. Next, when you use 2nd data point, you will work on the updated weights </font> ] <hr> --- class: body # Algorithm <hr> -- .pull-top[ <font size="5"> * Step1: Randomly initialize the weights to small values close to zero (but not equal to zero) * Step2: Pass first observation of the dataset through ANN algorithm * Step3: Forward Propagation: In which information is passed through neural network algorithm starting from input layer till the computation of cost function * Step4: Compare the predicted value and actual value to find out the difference or error * Step5: Back Propagation: In the step error are propagated back to the input layer to adjust the weights. The learning rate decides how fast we get the minimum cost function * Step6: Repeat Step1-Step5 till we get the minimum value of cost function with optimized value of weights for each of the input variable </font> ] <hr> --- class: body # Simple implementation of Neural Network in R <hr> -- .pull-left[ ```python library(neuralnet) traininginput = (1:3)^2 trainingoutput = sqrt(traininginput) trainingdata = cbind(traininginput,trainingoutput) colnames(trainingdata) <- c("Input","Output") net.sqrt <- neuralnet(Output~Input,trainingdata, hidden=5, threshold=0.01) plot(net.sqrt) ``` ] -- .pull-right[ <!-- --> -- ] <hr> --- --- class: body # Simple implementation of Neural Network in Python <hr> -- .pull-left[ ```python from keras.models import Sequential from keras.layers import Dense from keras import optimizers X = [1,4,9,16,25] y = [1,2,3,4,5] model = Sequential() model.add(Dense(6, input_dim=1, activation='relu')) model.add(Dense(1, activation='linear')) opt = optimizers.Adam(learning_rate=0.01) model.compile(loss='mean_squared_error', optimizer=opt, metrics=['accuracy']) model.fit(X, y, epochs=200, batch_size=10, verbose=0) predicitions = model.predict(X) scores = model.evaluate(X, y) for i in range(5): print('%s => %.2f (expected %.2f)' % (X[i], predicitions[i], y[i])) ``` ] -- .pull-right[ 1/1 [==============================] - 0s 2ms/step - loss: 0.0453 - accuracy: 0.2000 1 => 1.28 (expected 1.00) 4 => 1.96 (expected 2.00) 9 => 2.74 (expected 3.00) 16 => 3.82 (expected 4.00) 25 => 5.21 (expected 5.00) -- ] <hr> --- class: inverse, center, middle # Thanks ---